Yuxing Long | 龙宇星

I am a first-year PhD candidate in the Center on Frontiers of Computing Studies (CFCS) at Peking University, advised by Prof. Hao Dong. Before this, I obtained my Bachelor's and Master's degrees from Beijing University of Posts and Telecommunications (BUPT). My research interests include robot manipulation and embodied navigation.

Email: longyuxing [at] stu.pku.edu.cn

Publications

RealAppliance: Let High-fidelity Appliance Assets Controllable and Workable as Aligned Real Manuals

Yuzheng Gao*, Yuxing Long*, Lei Kang, Yuchong Guo, Ziyan Yu, Shangqing Mao, Jiyao Zhang, Ruihai Wu, Dongjiang Li, Hui Shen, Hao Dong

arXiv

Paper / Project

The first appliance digital assets controllable and workable as aligned real manuals.

NavSpace: How Navigation Agents Follow Spatial Intelligence Instructions

Haolin Yang*, Yuxing Long*, Zhuoyuan Yu, Zihan Yang, Minghan Wang, Jiapeng Xu, Yihan Wang, Ziyan Yu, Wenzhe Cai, Lei Kang, Hao Dong

arXiv

Paper / Project

The first benchmark and model for navigation spatial intelligence.

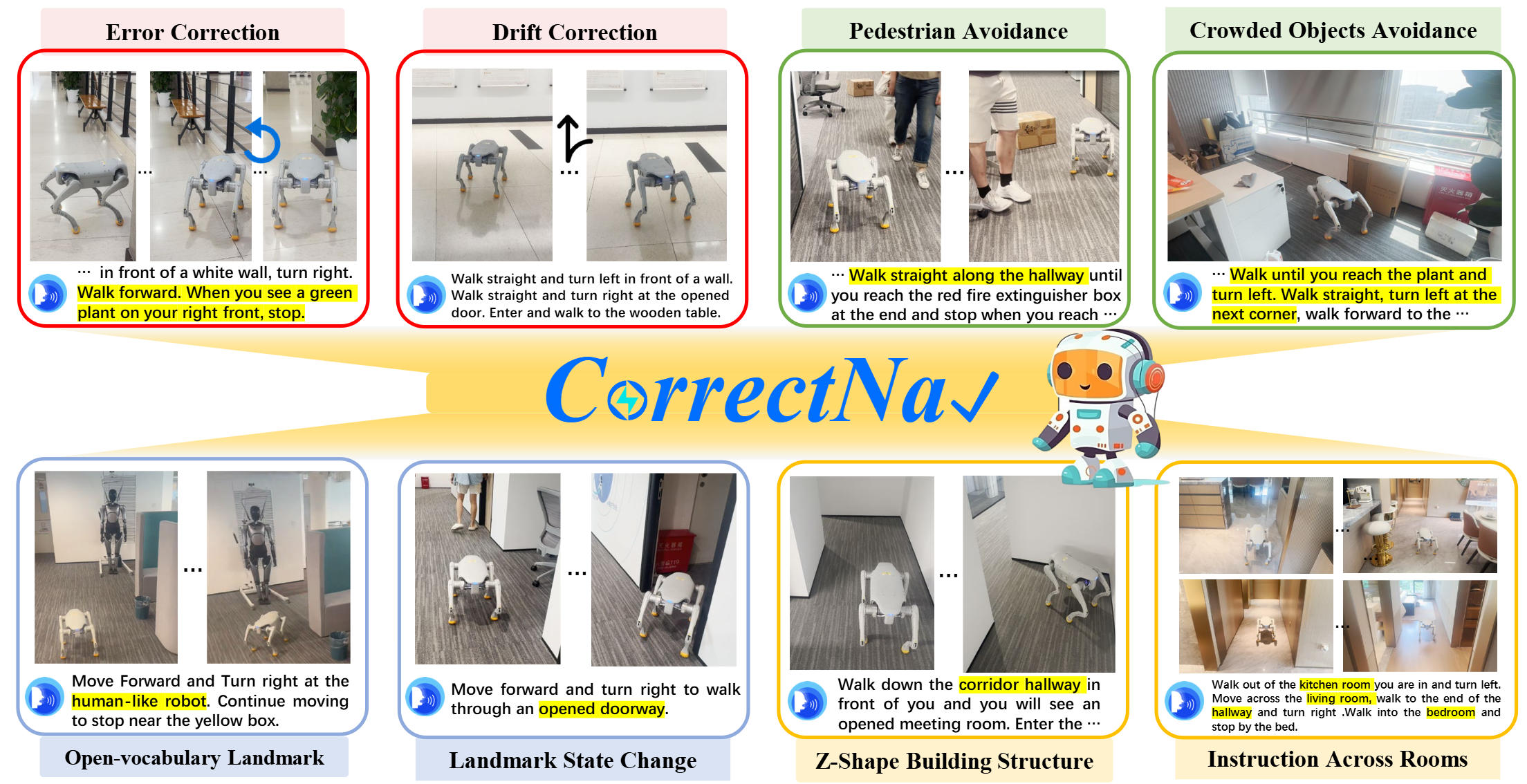

CorrectNav: Self-Correction Flywheel Empowers Vision-Language-Action Navigation Model

Zhuoyuan Yu*, Yuxing Long*, Zihan Yang, Chengyan Zeng, Hongwei Fan, Jiyao Zhang, Hao Dong

AAAI Conference on Artificial Intelligence 2026

Paper / Project

New SOTA performance on R2R-CE and RxR-CE.

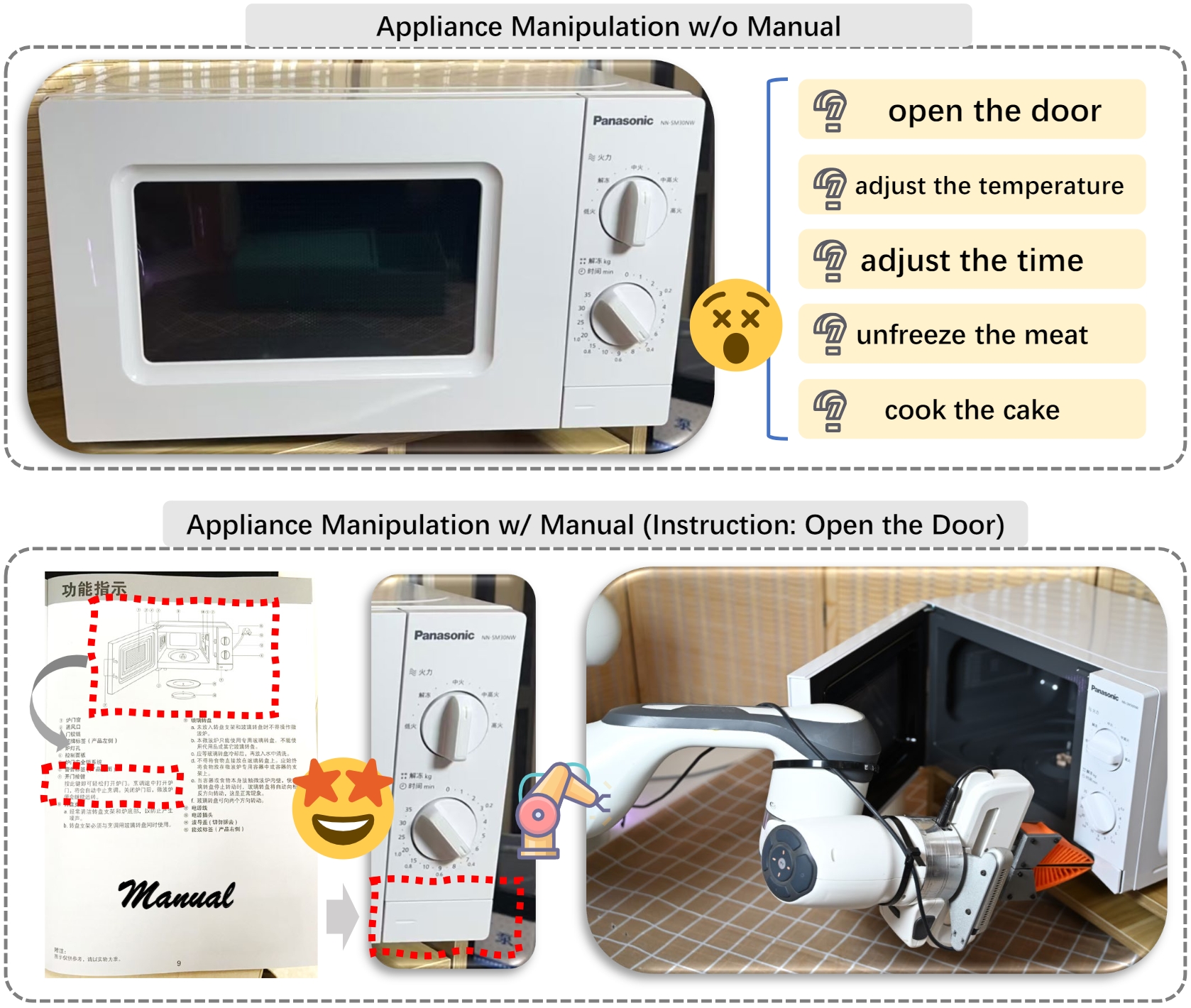

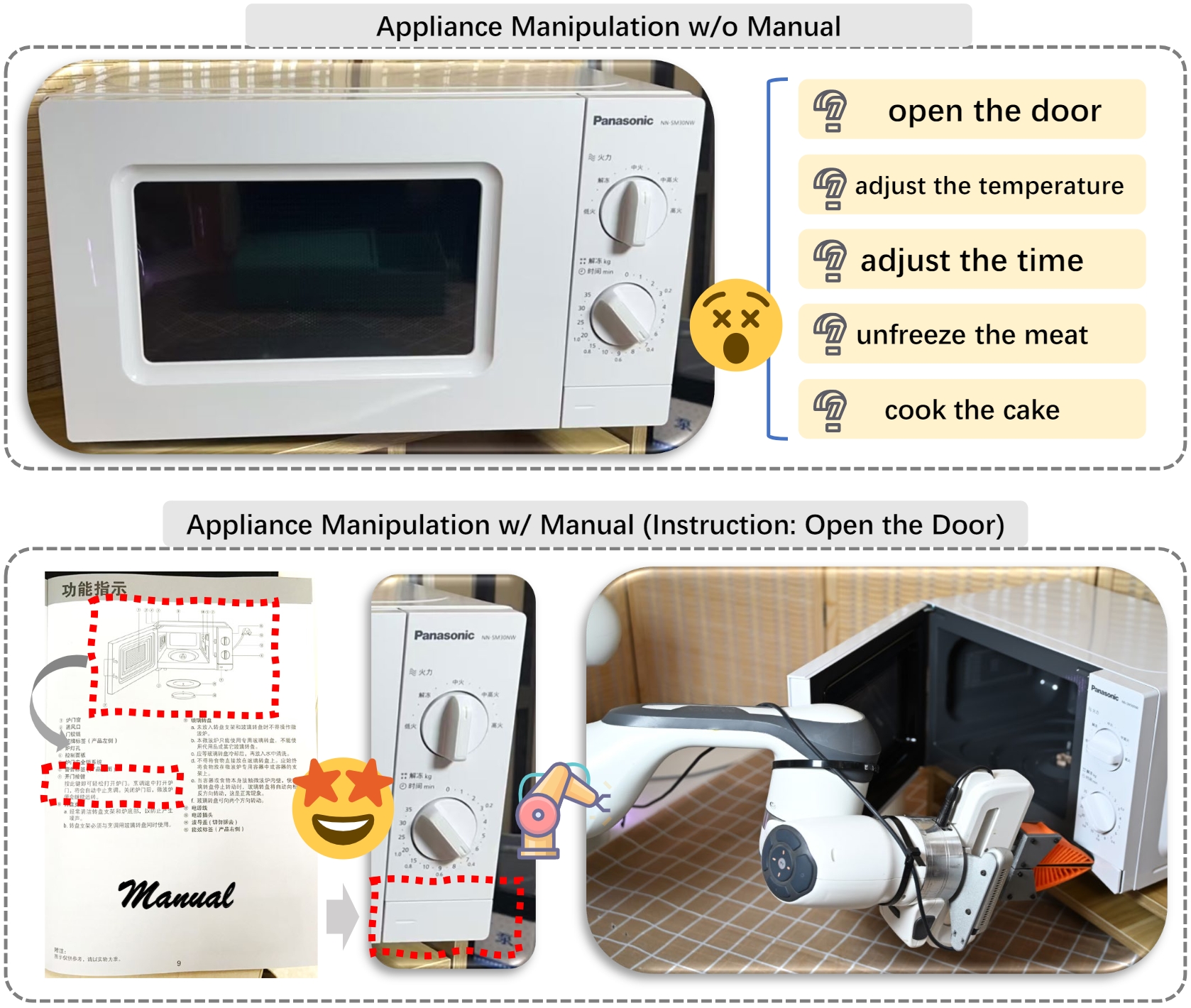

CheckManual: A New Challenge and Benchmark for Manual-based Appliance Manipulation

Yuxing Long, Jiyao Zhang, Mingjie Pan, Tianshu Wu, Taewhan Kim, Hao Dong

Conference on Computer Vision and Pattern Recognition (CVPR) 2025 (Highlight)

Paper / Project / Code / 机器之心

The first benchmark for manual-based appliance manipulation.

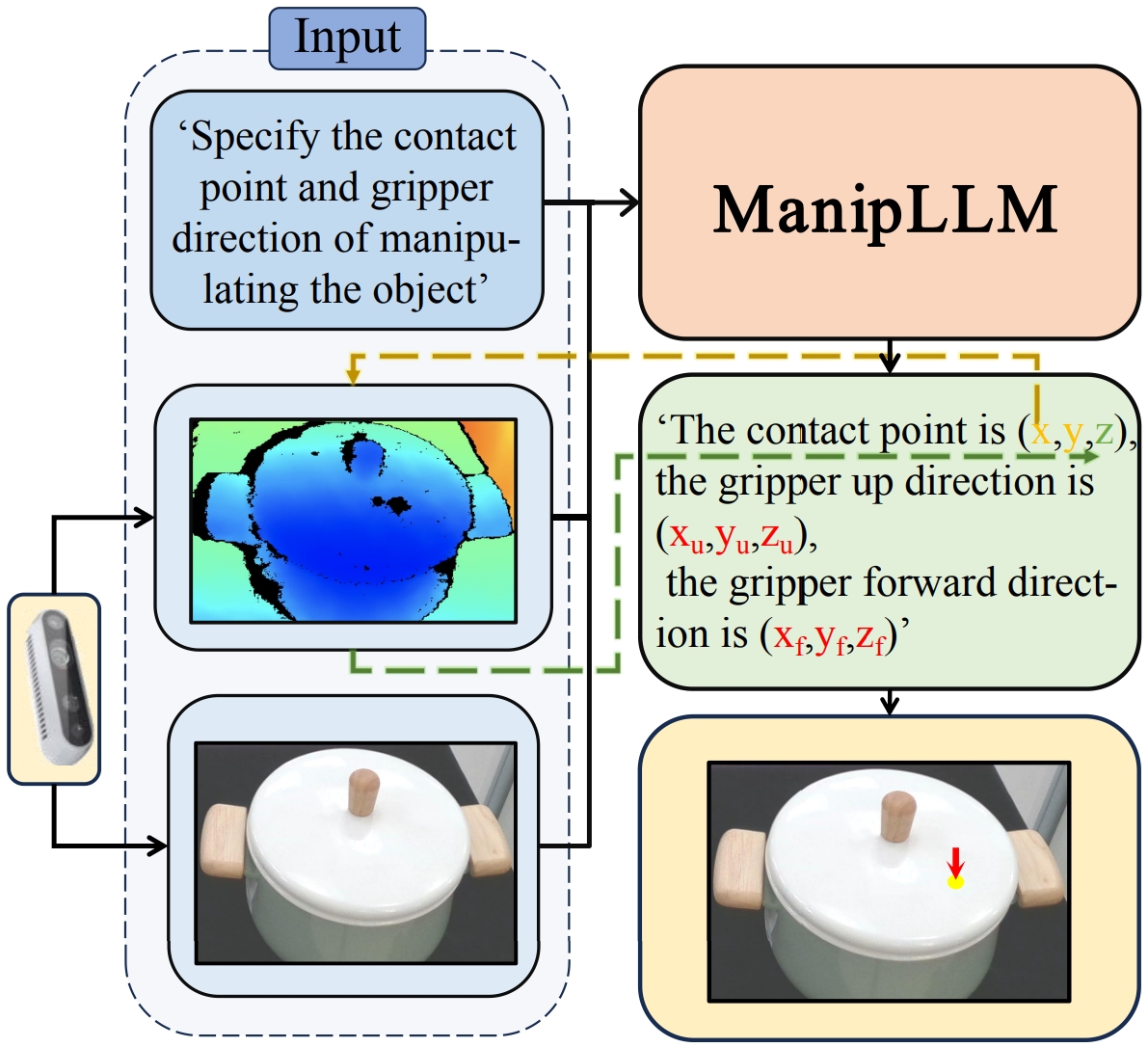

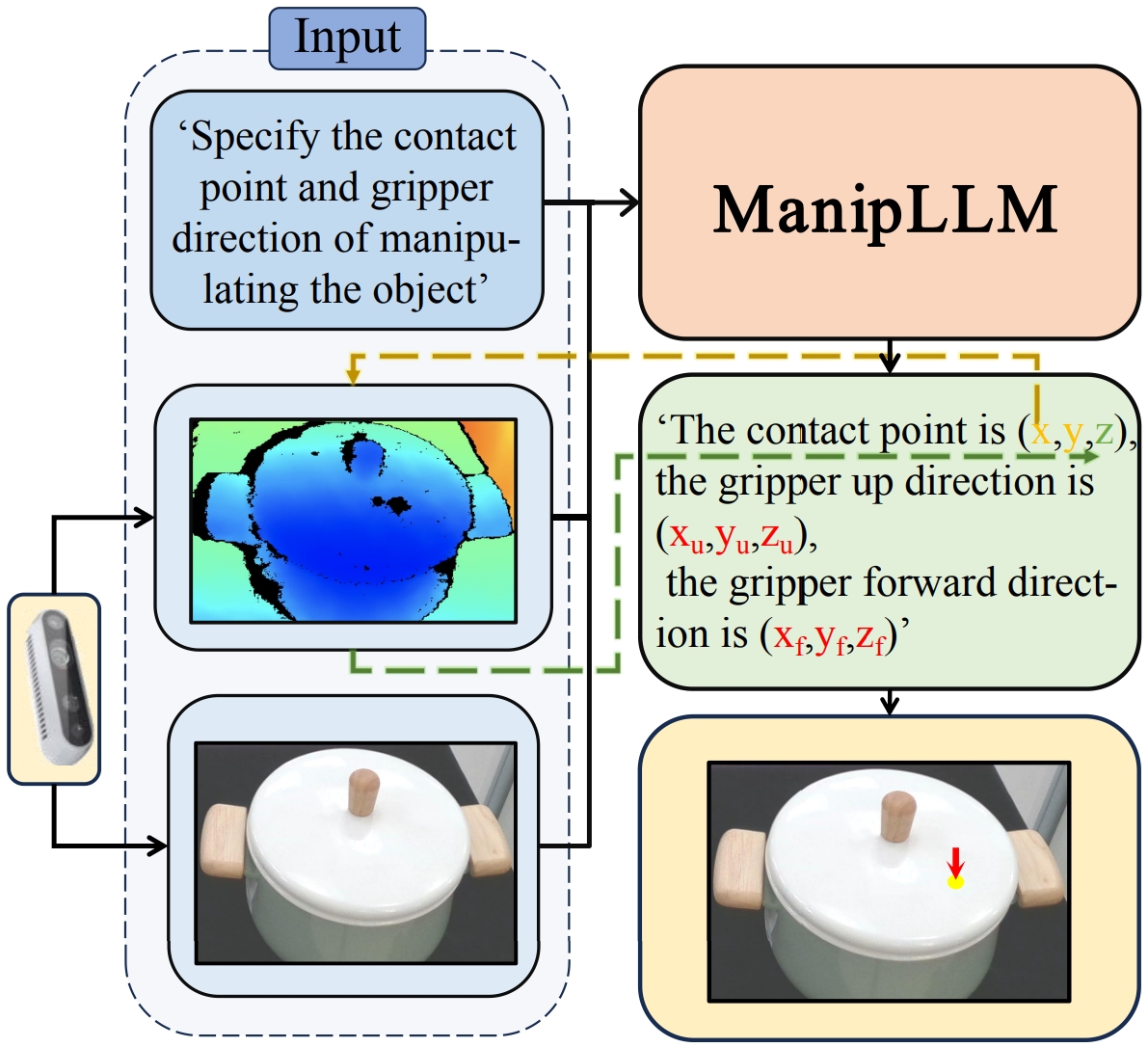

ManipLLM: Embodied Multimodal Large Language Model for Object-Centric Robotic Manipulation

Xiaoqi Li, Mingxu Zhang, Yiran Geng, Haoran Geng, Yuxing Long, Yan Shen, Renrui Zhang, Jiaming Liu, Hao Dong

Conference on Computer Vision and Pattern Recognition (CVPR) 2024

Paper / Code

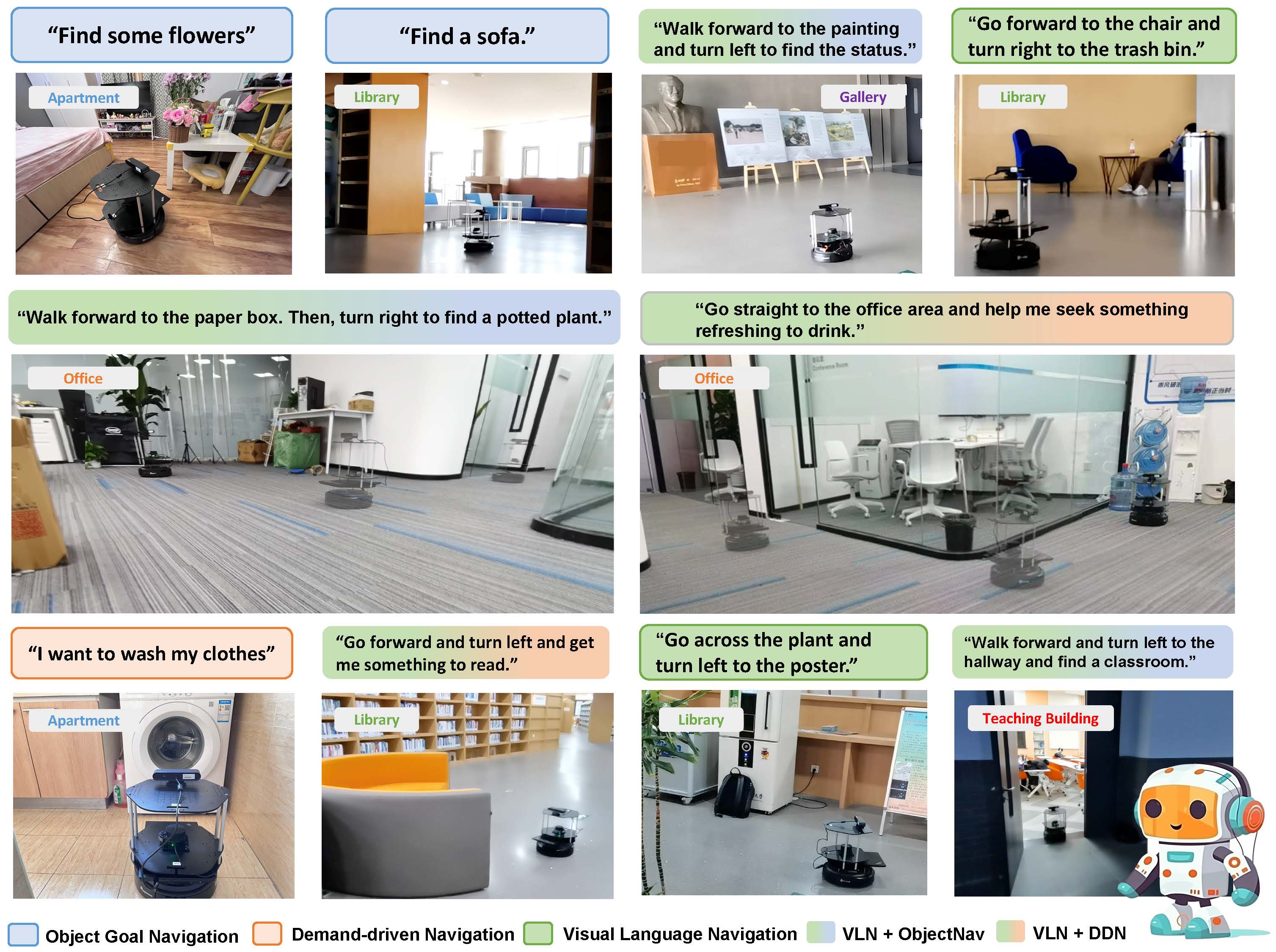

InstructNav: Zero-shot System for Generic Instruction Navigation in Unexplored Environment

Yuxing Long*, Wenzhe Cai*, Hongcheng Wang, Guanqi Zhan, Hao Dong

Conference on Robot Learning (CoRL) 2024

Paper / Project / Code / 量子位

The first zero-shot generic instruction navigation system without any pre-built maps.

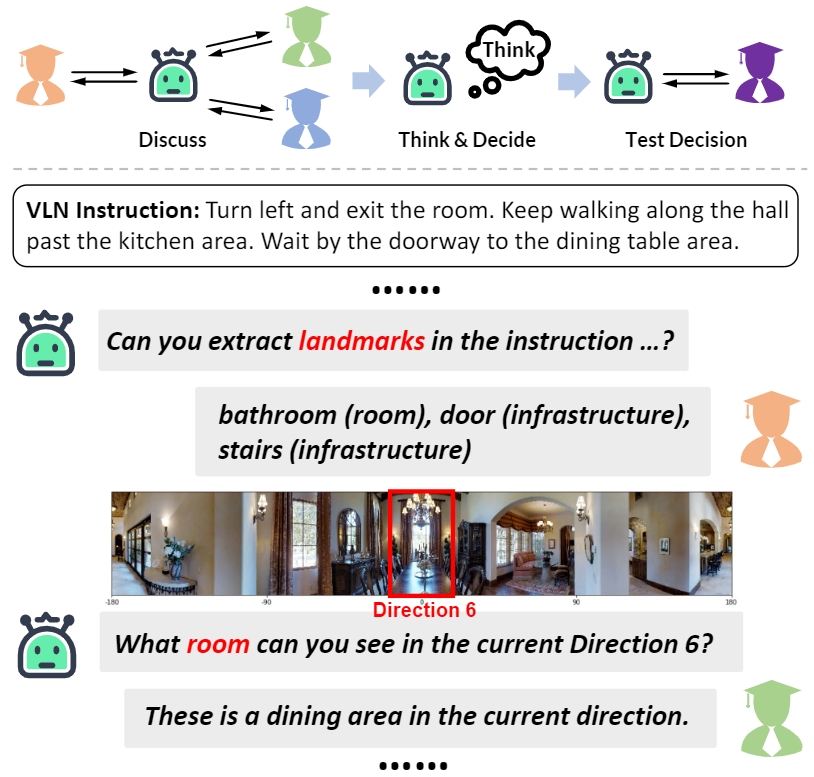

Discuss Before Moving: Visual Language Navigation via Multi-expert Discussions

Yuxing Long, Xiaoqi Li, Wenzhe Cai, Hao Dong

International Conference on Robotics and Automation (ICRA) 2024

Paper / Project / Code / 量子位

The DiscussNav agent actively discusses with multiple domain experts before moving.

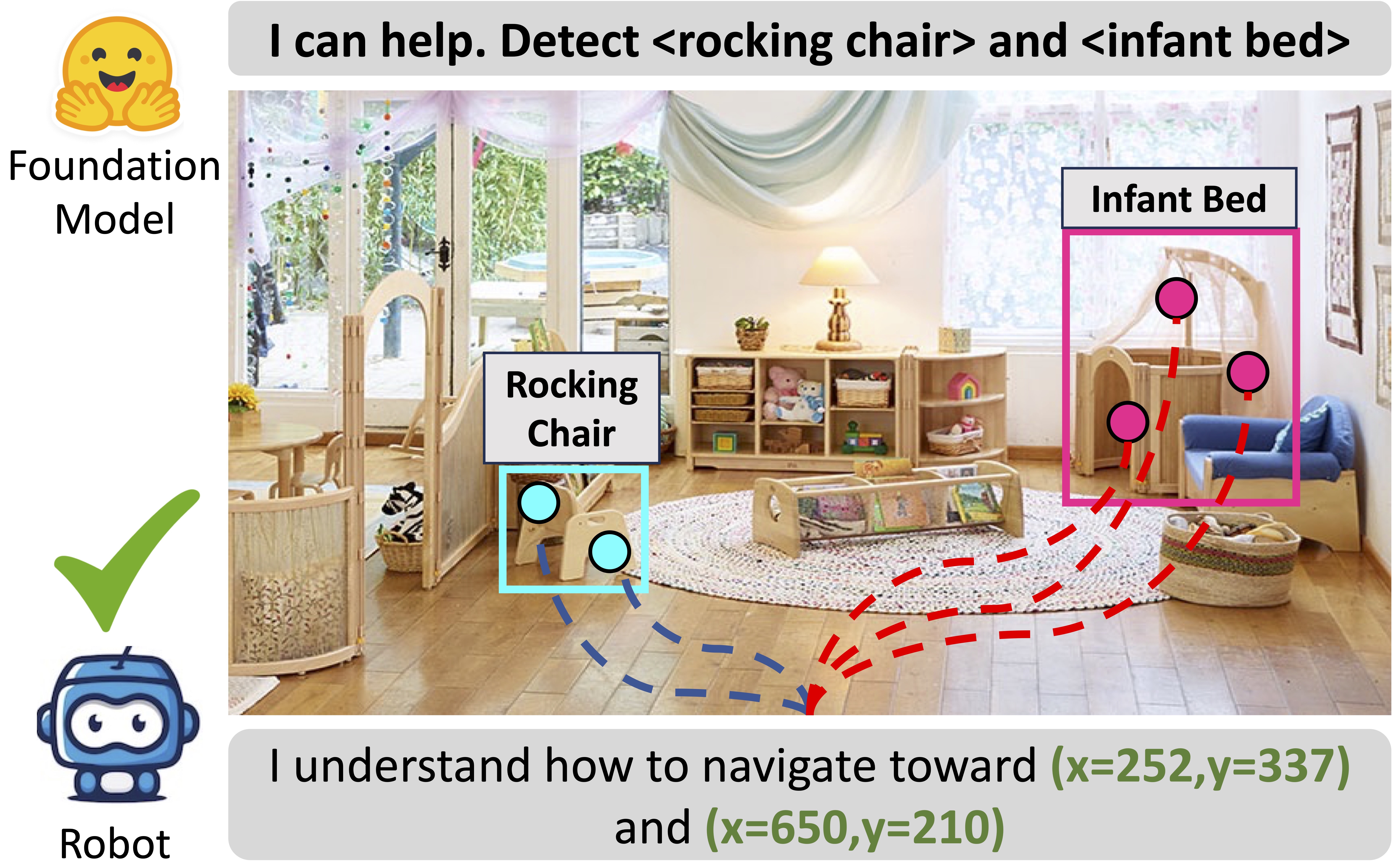

Bridging Zero-Shot Object Navigation and Foundation Models through Pixel-Guided Navigation Skill

Wenzhe Cai, Siyuan Huang, Guangran Cheng, Yuxing Long, Peng Gao, Changyin Sun, Hao Dong

International Conference on Robotics and Automation (ICRA) 2024

Paper / Project / Code

PixNav, a pure RGB-based navigation skill that uses a specified pixel as the goal and can navigate towards any object.

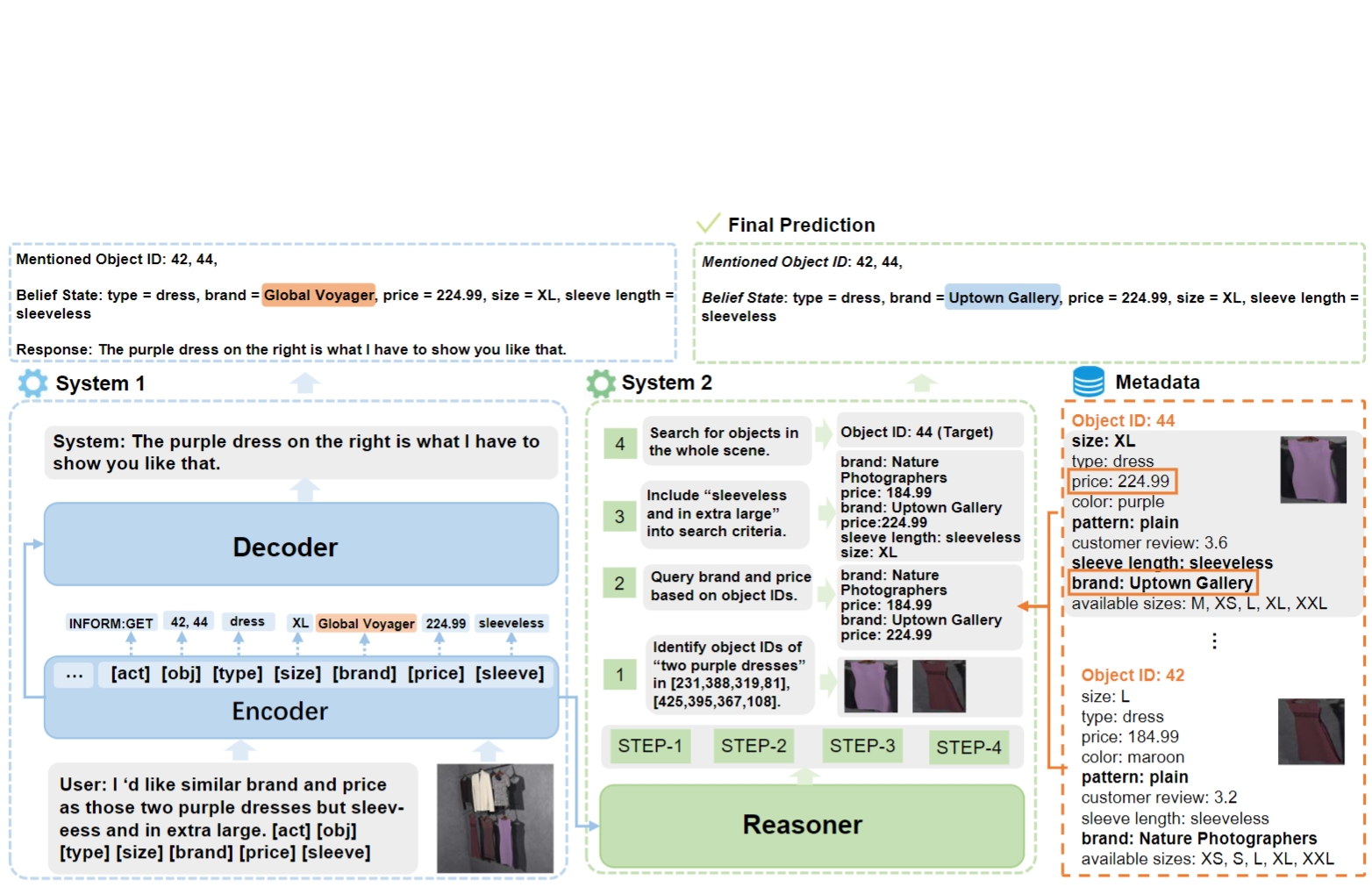

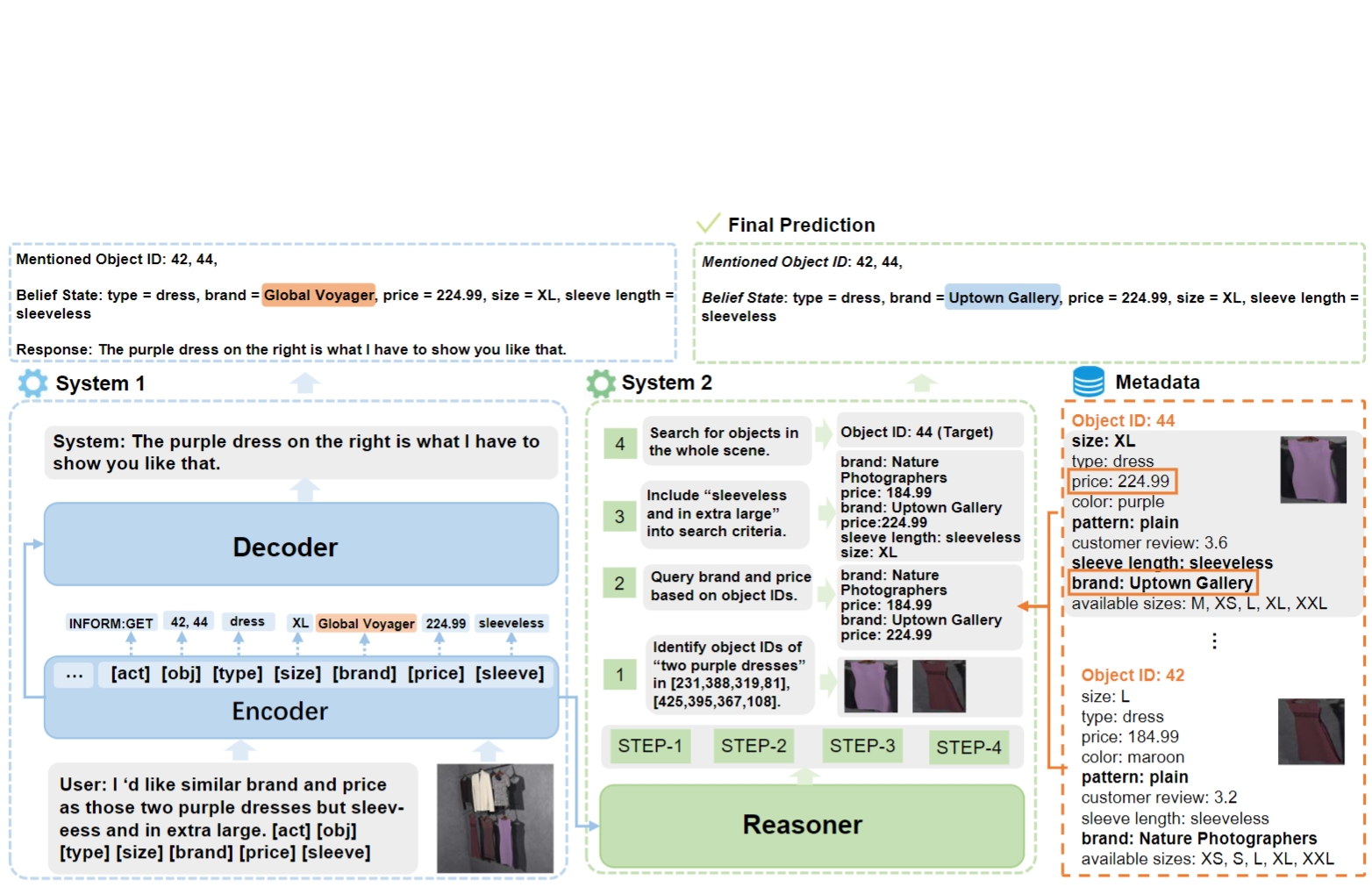

Improving Situated Conversational Agents with Step-by-Step Multi-modal Logic Reasoning

Yuxing Long*, Huibin Zhang*, Binyuan Hui*, Zhenglu Yang, Caixia Yuan, Xiaojie Wang, Fei Huang, Yongbin Li

Champion of SIMMC 2.1 Competition, DSTC 11 Workshop (Best Paper)

Paper / Code

We propose a dual-system framework to conduct multimodal logic reasoning step-by-step.

Whether you can locate or not? Interactive Referring Expression Generation

Fulong Ye, Yuxing Long, Fangxiang Feng, Xiaojie Wang

ACM Multimedia (MM) 2023

Paper / Code

We generate referring expressions through multi-round communication.

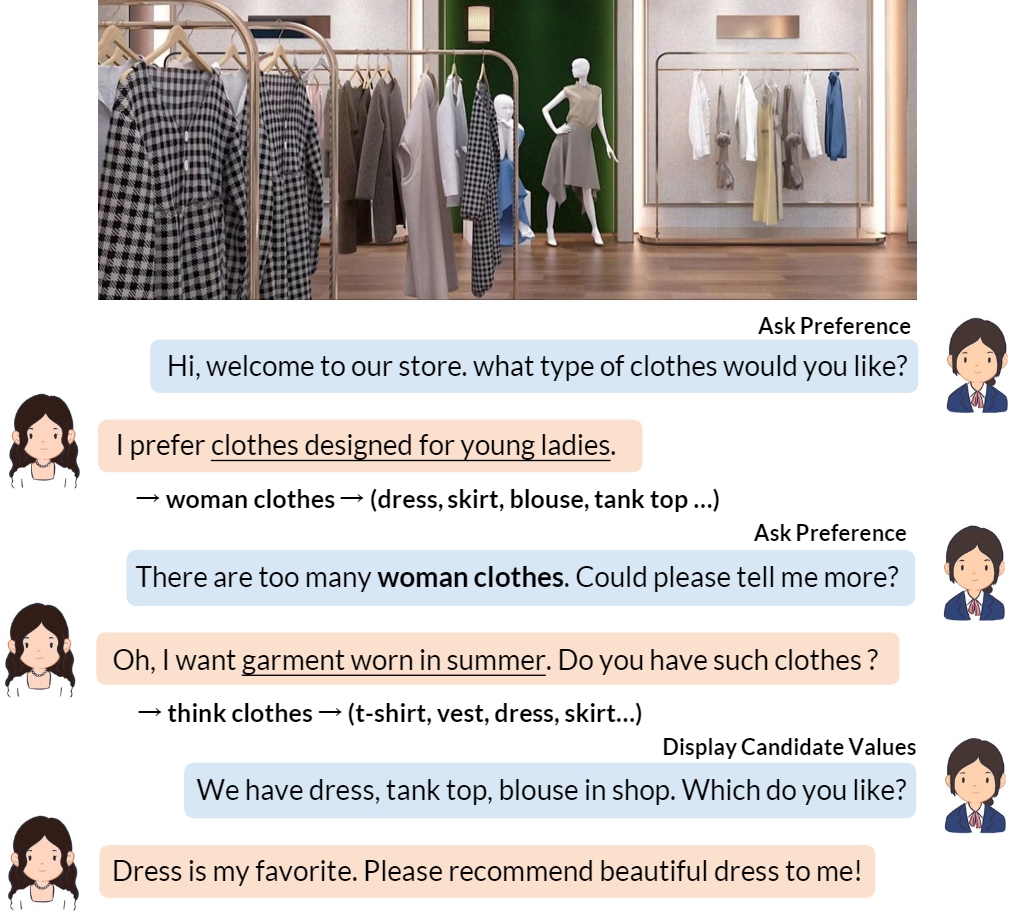

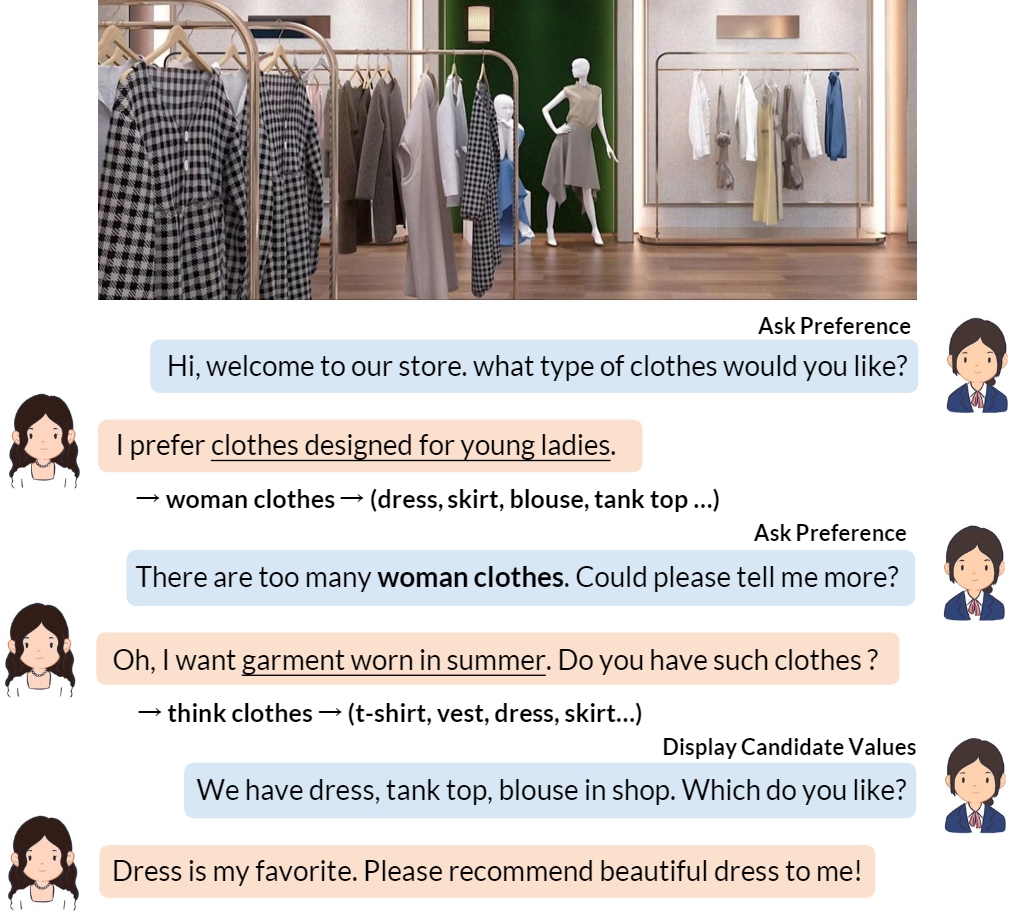

Multimodal Recommendation Dialog with Subjective Preference: A New Challenge and Benchmark

Yuxing Long, Binyuan Hui, Caixia Yuan, Fei Huang, Yongbin Li, Xiaojie Wang

Findings of the Association for Computational Linguistics (Findings of ACL) 2023

Paper

A new dataset for multimodal recommendation dialog with subjective preferences.

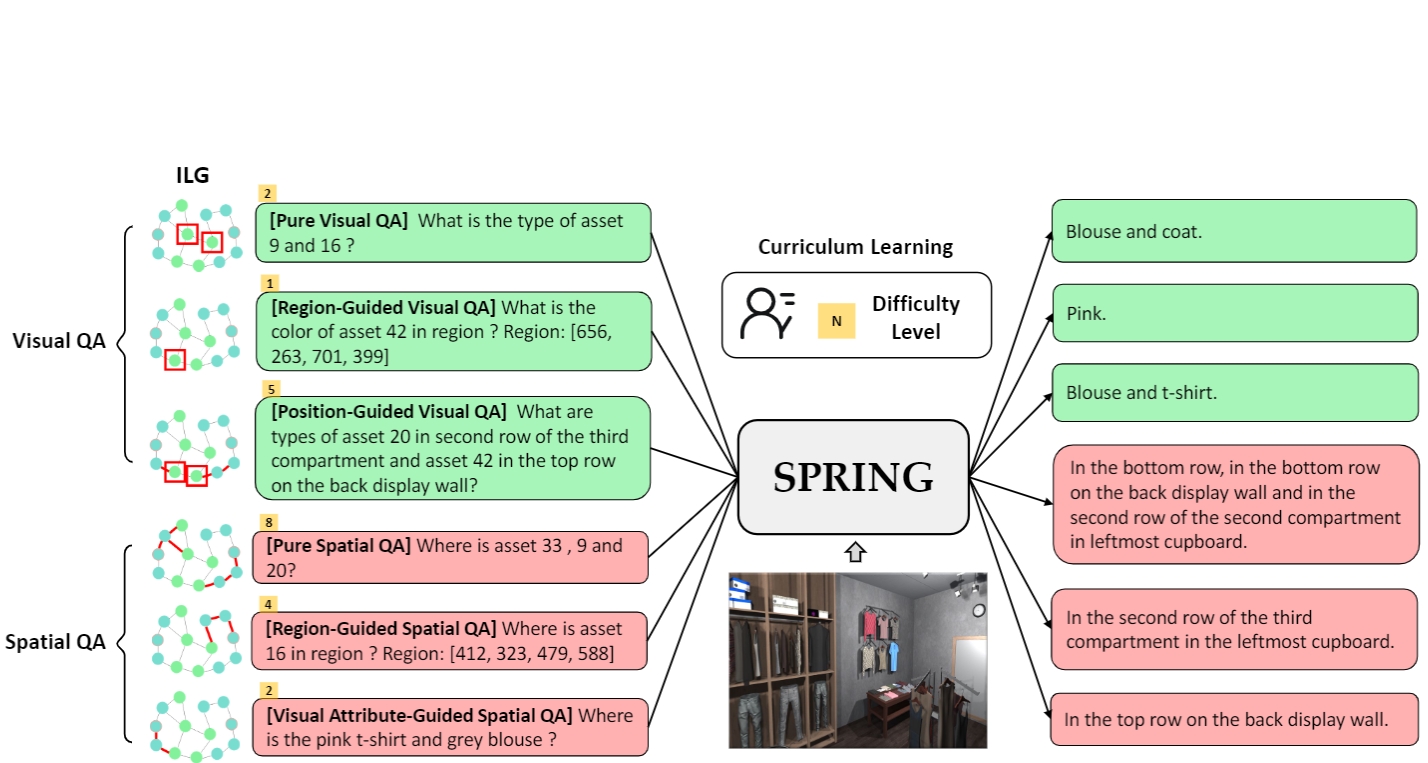

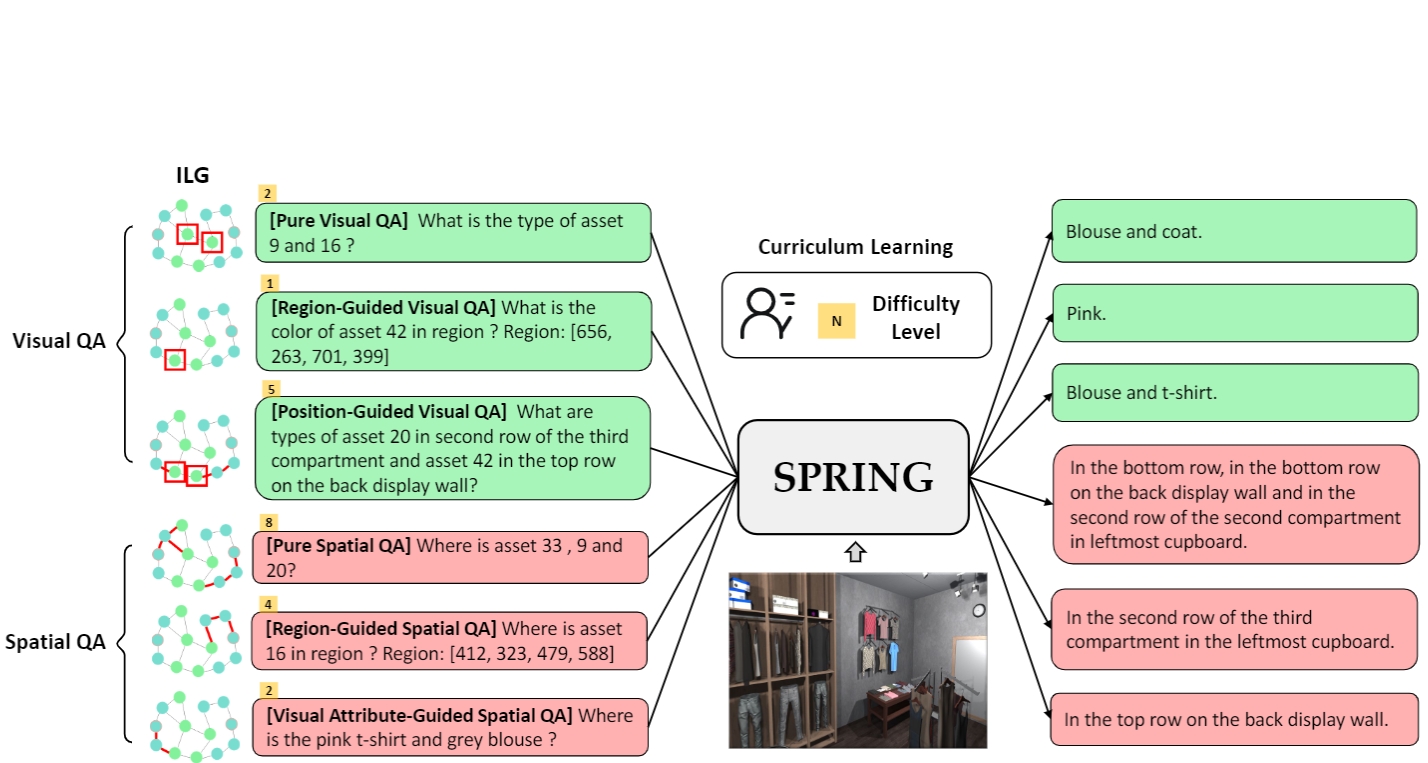

SPRING: Situated Conversation Agent Pretrained with Multimodal Questions from Incremental Layout Graph

Yuxing Long, Binyuan Hui, Fulong Ye, Yanyang Li, Zhuoxin Han, Caixia Yuan, Yongbin Li, Xiaojie Wang

AAAI Conference on Artificial Intelligence 2023 (Oral)

Paper / Code

We improve the situated conversation agent through novel multimodal question-answering pretraining tasks.

Services

Reviewer: RAL 2025


Selected Awards and Honors

Outstanding Master's Thesis of Beijing University of Posts and Telecommunications, 2024